You can see it in action here or jump straight into using it by following this link. The diagram below shows the gist of the idea.

I really wanted to build something that people of all levels of expertise could set up and use for free, and that heavily influenced the decisions I made about the architecture and infrastructure of the platform. It may seem like an unusual approach, but GitHub is actually a pretty interesting choice to achieve #2 and #3. With GitHub’s API, you can easily read and write files in a repository, so you can effectively use it as a persistent data store. On top of that, GitHub Pages makes the same repository a great place to serve a website from: you get fast and secure hosting, with the option to use a custom domain. All this comes for free, as long as you’re okay with using a public repository. I’ve used this approach before when I built Staticman, and it worked really well.

As for #1, I built a small Node.js application that receives test requests, sends them to WebPageTest, retrieves the results and pushes them to a GitHub repository as data files, which are then picked up by the visualisation layer.
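To make the idea of GitHub as a data store a little more concrete, here is a minimal sketch, in TypeScript since the application runs on Node.js, of how a single result could be written to a repository through GitHub’s “create or update file contents” REST endpoint, `PUT /repos/{owner}/{repo}/contents/{path}`. It is not the actual application’s code: the `TestResult` shape, the `results/<timestamp>.json` layout and all function names are assumptions for illustration, and fetching results from WebPageTest is left out.

```typescript
// Hypothetical shape of one WebPageTest result, reduced to the metrics
// the visualisation layer needs.
interface TestResult {
  url: string;
  timestamp: number; // Unix time, in seconds
  loadTime: number; // milliseconds
}

interface PutRequest {
  url: string;
  init: RequestInit;
}

// The Contents API expects base64-encoded file content. btoa only accepts
// Latin-1 characters, so the string is encoded as UTF-8 bytes first.
function toBase64Utf8(text: string): string {
  const bytes = new TextEncoder().encode(text);
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Hypothetical layout: one JSON data file per test run.
function resultPath(result: TestResult): string {
  return `results/${result.timestamp}.json`;
}

// Builds (but does not send) the PUT request that creates the data file.
// Overwriting an existing file would also require its current blob `sha`.
function buildPutFileRequest(
  owner: string,
  repo: string,
  result: TestResult,
  token: string
): PutRequest {
  const body = {
    message: `Add WebPageTest result for ${result.url}`,
    content: toBase64Utf8(JSON.stringify(result, null, 2)),
  };
  return {
    url: `https://api.github.com/repos/${owner}/${repo}/contents/${resultPath(result)}`,
    init: {
      method: "PUT",
      headers: {
        Accept: "application/vnd.github+json",
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(body),
    },
  };
}

// Sends the request; needs a runtime with a global fetch (e.g. Node.js 18+).
function saveResult(owner: string, repo: string, result: TestResult, token: string): Promise<Response> {
  const request = buildPutFileRequest(owner, repo, result, token);
  return fetch(request.url, request.init);
}
```

Building the request separately from sending it keeps the interesting part testable without network access. Calling `saveResult("octocat", "perf-data", result, token)` would then create a new data file in that (hypothetical) repository, ready for the visualisation layer to pick up.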

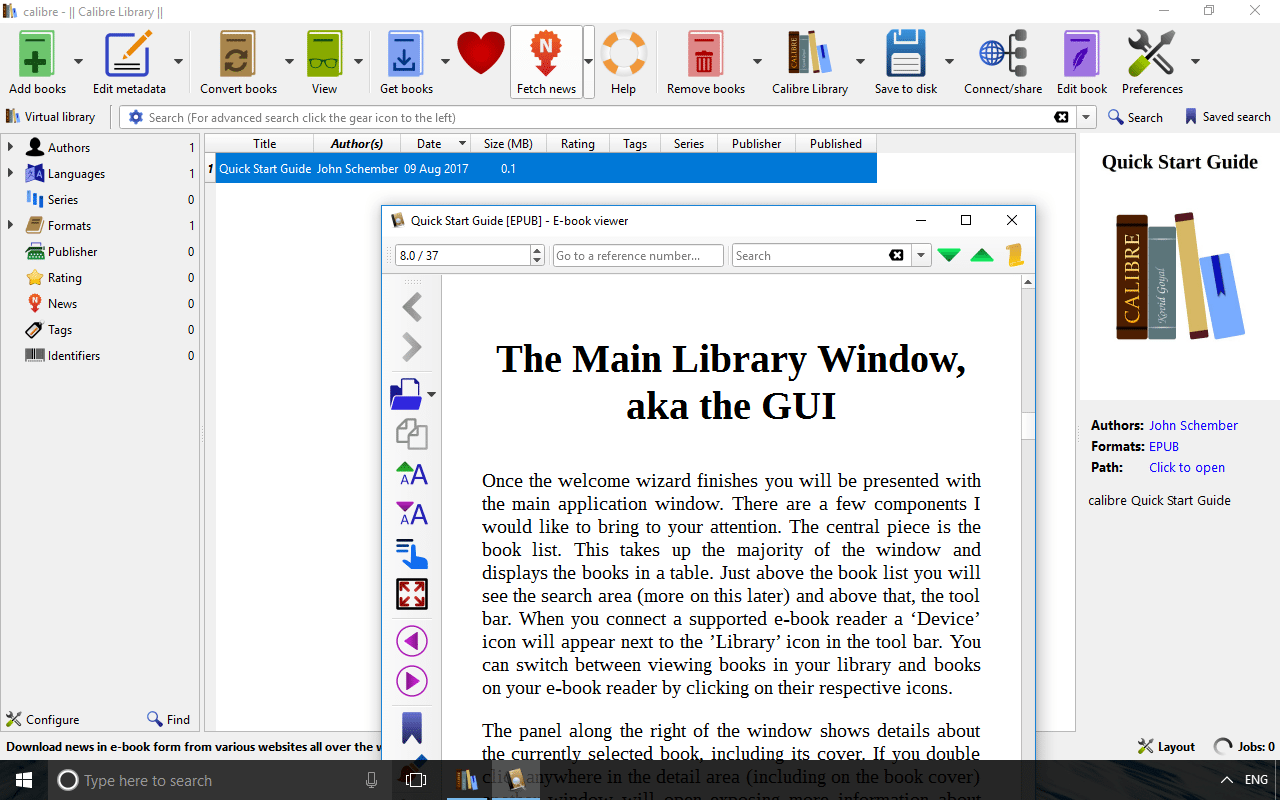

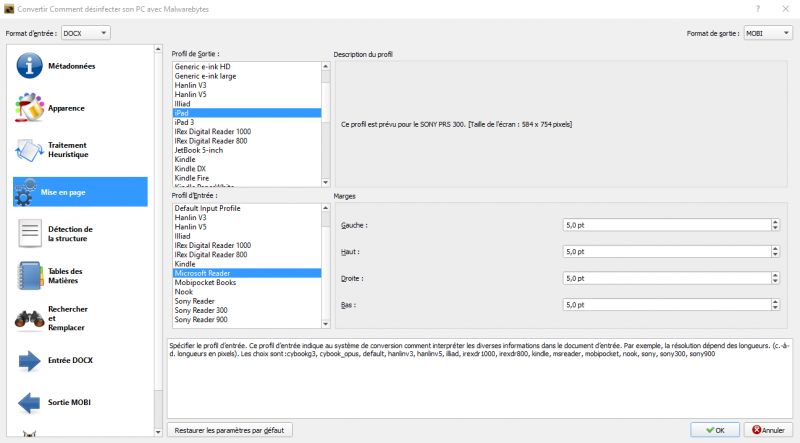

Companies like SpeedCurve or Calibre offer professional monitoring tools as a service that you should seriously consider if you’re running a business. They use private instances of WebPageTest rather than the public one, which means no usage limits and no unpredictable availability. The tool I created, which I’ll introduce in this article, is a modest, free alternative to those services.

I set out to create a tool that allowed me to compile and visualise all this information, and I wanted to build it in a way that allowed others to do it too. In particular, I wanted it to support:

- Running tests manually or having them triggered by a third party, like a webhook fired after a GitHub release commit.
- Running recurrent tests with a configurable time interval.
- Testing multiple URLs, with the ability to configure different test locations, devices and connectivity types.
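These requirements map naturally onto a small configuration structure. The sketch below is hypothetical rather than the tool’s real configuration format: it describes each monitored page as a profile with an id, a URL, a WebPageTest location, a connectivity type and a test interval; builds the corresponding request to the public instance’s `runtest.php` endpoint using its `url`, `k` (API key), `f` (response format) and `location` parameters; and decides which profiles are due for a recurrent run. Device emulation is left out, and appending the connectivity to the location string (as in `Dulles:Chrome.Cable`) is an assumption worth checking against the WebPageTest documentation.

```typescript
// Hypothetical configuration for one monitored page: which URL to test,
// from where, over which connection, and how often.
interface TestProfile {
  id: string; // unique per profile, since one URL may have several profiles
  url: string;
  location: string; // WebPageTest location and browser, e.g. "Dulles:Chrome"
  connectivity: string; // connectivity profile, e.g. "Cable" or "3G"
  intervalHours: number; // time between recurrent runs
}

// Builds a runtest.php request URL asking for a JSON response. The
// connectivity is appended to the location ("location:browser.connectivity").
function buildRunTestUrl(
  profile: TestProfile,
  apiKey: string,
  server: string = "https://www.webpagetest.org"
): string {
  const params = new URLSearchParams({
    url: profile.url,
    k: apiKey,
    f: "json",
    location: `${profile.location}.${profile.connectivity}`,
  });
  return `${server}/runtest.php?${params.toString()}`;
}

// A profile is due if it has never run, or if at least `intervalHours` have
// passed since its last run. Times are in milliseconds since the epoch.
function isDue(profile: TestProfile, lastRunMs: number | undefined, nowMs: number): boolean {
  if (lastRunMs === undefined) {
    return true;
  }
  return nowMs - lastRunMs >= profile.intervalHours * 60 * 60 * 1000;
}

// Returns the profiles that should be sent to WebPageTest right now,
// given the last run time recorded for each profile id.
function dueProfiles(
  profiles: TestProfile[],
  lastRuns: Record<string, number>,
  nowMs: number
): TestProfile[] {
  return profiles.filter((p) => isDue(p, lastRuns[p.id], nowMs));
}
```

A scheduler (a cron job, for instance) could call `dueProfiles` periodically, while a manual or webhook-triggered run would simply skip the `isDue` check for the profiles involved.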

A couple of months ago I wrote about using WebPageTest, and more specifically its RESTful API, to monitor the performance of a website. Unarguably, the data it provides can give engineers valuable information for tweaking various parts of a system to make it perform better. But how exactly does this tool fit into your development workflow? When should you run tests, and what exactly do you do with the results? How do you visualise them? Now that we can obtain performance metrics programmatically through the RESTful API, we should be looking into ways of persisting that data and tracking how it evolves over time. This means being able to see how the load time of a particular page is affected by new features, assets or infrastructural changes.
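Tracking progress over time boils down to comparing each new measurement with what came before. As a minimal sketch, assuming each page’s history is stored as a list of timestamped load times, the functions below compare the newest run with the median of the previous few runs; using the median makes a single unusually slow or fast run less likely to trigger a false alarm. The data shape, the window of five runs and the 10% threshold are hypothetical choices, not part of WebPageTest or of the tool described here.

```typescript
// One point in a page's history (hypothetical shape).
interface DataPoint {
  timestamp: number; // Unix time, in seconds
  loadTime: number; // milliseconds
}

function median(values: number[]): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

// Relative change of the newest load time against the median of up to
// `windowSize` earlier runs (0.25 means 25% slower). Returns undefined
// until there are at least two data points. Assumes positive load times.
function relativeChange(points: DataPoint[], windowSize: number = 5): number | undefined {
  if (points.length < 2) {
    return undefined;
  }
  const ordered = points.slice().sort((a, b) => a.timestamp - b.timestamp);
  const latest = ordered[ordered.length - 1];
  const previous = ordered.slice(-1 - windowSize, -1).map((p) => p.loadTime);
  const baseline = median(previous);
  return (latest.loadTime - baseline) / baseline;
}

// Flags a regression when the newest run is slower than the baseline by
// more than `threshold` (a hypothetical default of 10%).
function isRegression(points: DataPoint[], threshold: number = 0.1, windowSize: number = 5): boolean {
  const change = relativeChange(points, windowSize);
  return change !== undefined && change > threshold;
}
```

Running a check like this whenever a new data file is stored would make the effect of a release visible straight away, rather than only when someone looks at the charts.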
